IMPORTANT UPDATE AT THE END OF THE ARTICLE

In the lab I use to test and play with numerous VMware solutions, I run several nested ESXi servers. Nested ESXi servers are ESXi servers running as a VM. This is not a supported configuration, but it lets me test and play around with software without having to rebuild my physical lab environment all the time.

So first, a little on the setup of my nested ESXi servers:

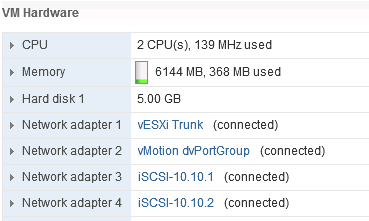

The VMs for my nested ESXi servers have 4 NICs:

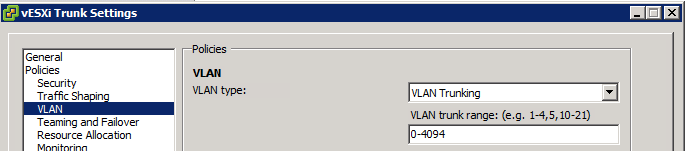

The first NIC connects to “vESXi Trunk”. This is a port group on my physical ESXi hosts, configured on a vDS with VLAN type “VLAN Trunking”, so all VLANs are passed through to my nested ESXi hosts.

I use this VLAN trunk to present my management network and my VM networks to my nested ESXi servers.

I also have a NIC that connects to my vMotion network, and two NICs that connect to my iSCSI networks. I use two subnets and two VLANs for my iSCSI connections.

In my physical setup I use jumbo frames on these networks. I did the same in my nested ESXi hosts, and it worked perfectly … until I upgraded my nested ESXi hosts to vSphere 5.5 …

After the upgrade with Update Manager finished, my VMs came back up without access to my iSCSI storage and behaved very strangely …

This is when I started troubleshooting.

First I logged in to my nested ESXi server and tried to vmkping an IP address on my iSCSI array. Even with non-jumbo packets (1472 bytes or smaller) I saw a lot of packet loss.

And vmkpings with 8972 bytes were even worse.
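The two sizes follow from simple arithmetic: a ping payload has to leave room for the 20-byte IPv4 header and the 8-byte ICMP header, so 1472 bytes is the largest payload that fits in a standard 1500-byte MTU and 8972 bytes the largest that fits in a 9000-byte jumbo MTU. A minimal Python sketch of that calculation, assuming IPv4 without header options (the function name is just for illustration):

```python
# Largest ICMP echo payload that fits in one unfragmented IPv4 packet.
# Assumes a 20-byte IPv4 header (no options) and the 8-byte ICMP echo header.
IPV4_HEADER_BYTES = 20
ICMP_HEADER_BYTES = 8


def max_icmp_payload(mtu: int) -> int:
    """Return the largest ping payload (in bytes) that fits within `mtu`."""
    payload = mtu - IPV4_HEADER_BYTES - ICMP_HEADER_BYTES
    if payload < 0:
        raise ValueError(f"MTU {mtu} is too small for IPv4 + ICMP headers")
    return payload


for mtu in (1500, 9000):
    # Prints 1472 for a standard MTU and 8972 for a jumbo MTU.
    print(f"MTU {mtu}: max payload {max_icmp_payload(mtu)} bytes")
```

These are the values you would typically pass to vmkping's `-s` option, combined with `-d` so the packet is not fragmented and a too-small MTU anywhere on the path shows up as a failure.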

On my physical ESXi hosts and network, I could not find any specific issues.

When looking at the vmnic counters on the nested ESXi host with “ethtool -S vmnic2”, I did notice that the “alloc_rx_buff_failed” counter was increasing, even when I was not sending any traffic over the interface.
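To see whether a counter such as “alloc_rx_buff_failed” keeps climbing, you can compare two `ethtool -S vmnic2` snapshots taken some time apart. The Python sketch below does that comparison on captured text. It assumes the usual indented `counter_name: value` layout of `ethtool -S` output; the function names and the sample values are hypothetical placeholders, not output from my lab.

```python
import re

# Matches indented "counter_name: value" lines, assuming the usual
# `ethtool -S` layout; header lines such as "NIC statistics:" are ignored.
_STAT_LINE = re.compile(r"^\s*([A-Za-z0-9_.\[\]-]+):\s*(\d+)\s*$")


def parse_ethtool_stats(text: str) -> dict:
    """Turn captured `ethtool -S <nic>` output into a {counter: value} dict."""
    stats = {}
    for line in text.splitlines():
        match = _STAT_LINE.match(line)
        if match:
            stats[match.group(1)] = int(match.group(2))
    return stats


def counter_delta(before: str, after: str, name: str) -> int:
    """How much counter `name` grew between two snapshots (0 if absent)."""
    old = parse_ethtool_stats(before).get(name, 0)
    new = parse_ethtool_stats(after).get(name, 0)
    return new - old


# Placeholder snapshots for illustration only, not captured from a real host.
snapshot_1 = """NIC statistics:
     rx_packets: 1000
     alloc_rx_buff_failed: 10
"""
snapshot_2 = """NIC statistics:
     rx_packets: 1000
     alloc_rx_buff_failed: 25
"""

growth = counter_delta(snapshot_1, snapshot_2, "alloc_rx_buff_failed")
print(f"alloc_rx_buff_failed grew by {growth}")
```

A counter that grows while rx_packets stays flat, as in this made-up example, matches the symptom described above: buffer allocation failures without any traffic being sent.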

My other networks on the nested ESXi hosts did not seem to have any issues. The only difference between those networks and my iSCSI networks was that the iSCSI networks had jumbo frames configured.

So I decided to change the MTU for the iSCSI setup from 9000 to 1500. First I changed the MTU of the VMkernel port so it would send out 1500-byte packets … no change … Then I changed the MTU of the vSwitch itself to 1500, and voilà, connectivity was restored and the “alloc_rx_buff_failed” counter stopped increasing.

This was easily reproducible.

So for some reason, the combination of vSphere 5.5 as a nested ESXi server and jumbo frames did not work.

Since nested ESXi is not officially supported, opening a case with VMware would probably not help, so I reached out to “Mister nested ESXi” William Lam to see if he had experienced the same kind of issues in his nested labs. He told me he had not seen this before. We exchanged some information, and William asked which NIC type I had configured for the nested ESXi VMs. I was using the good old e1000 NIC. William suggested trying either the e1000e or the vmxnet3 adapter.

The e1000e adapter can easily be selected when the guest OS type is set to “VMware ESXi 5.x”. To configure the vmxnet3 adapter, you need to edit the .vmx file or temporarily change the guest OS type.
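For reference, the adapter type of each virtual NIC is stored in the VM's .vmx file as an `ethernetN.virtualDev` entry, where N is the NIC number starting at 0. The Python sketch below rewrites that entry in the file's text. The function name is hypothetical, and the sketch assumes you only edit the file while the VM is powered off and keep a backup of the original.

```python
import re

# Adapter types discussed in this article. Each virtual NIC's type lives in
# the .vmx file under the key ethernet<N>.virtualDev (N starts at 0).
VALID_DEVICES = {"e1000", "e1000e", "vmxnet3"}


def set_virtual_dev(vmx_text: str, nic_index: int, device: str) -> str:
    """Return vmx_text with ethernet<nic_index>.virtualDev set to `device`.

    Replaces the first existing virtualDev entry for that NIC, or appends
    a new entry if the NIC has none yet.
    """
    if device not in VALID_DEVICES:
        raise ValueError(f"unsupported device type: {device}")
    key = f"ethernet{nic_index}.virtualDev"
    new_line = f'{key} = "{device}"'
    # Match the whole "key = value" line, ignoring case and spaces/tabs.
    pattern = re.compile(
        rf"^[ \t]*{re.escape(key)}[ \t]*=.*$", re.IGNORECASE | re.MULTILINE
    )
    if pattern.search(vmx_text):
        return pattern.sub(lambda _m: new_line, vmx_text, count=1)
    # No entry for this NIC yet: append one on its own line.
    if vmx_text and not vmx_text.endswith("\n"):
        vmx_text += "\n"
    return vmx_text + new_line + "\n"


# Illustrative two-line excerpt, not a complete .vmx file.
example = 'ethernet0.present = "TRUE"\nethernet0.virtualDev = "e1000"\n'
print(set_virtual_dev(example, 0, "e1000e"), end="")
```

With four NICs per nested ESXi VM, as in my setup, you would apply this to ethernet0 through ethernet3.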

I thought it would not hurt to try this, and indeed, with either the e1000e or the vmxnet3 adapter I could configure jumbo frames without any issues, so it seems the issues I experienced were specific to the e1000 driver.

I do know VMware only offers the e1000 adapter because its driver is supported out of the box in most OSes, and that VMware advises using either e1000e or vmxnet3. But I never expected that a change, most likely in this driver that has been around for several years, would suddenly break my setup after upgrading from 5.1u1 to 5.5 …

So at least I was able to work around this issue and can use jumbo frames in my nested lab again. I am still kind of curious what changed in the e1000 driver or the ESXi layer in 5.5 to cause this issue, since it did not happen in 5.1.

I tried to find a way to set a higher debug level for the e1000 NIC (as is possible with several other drivers), but without success so far.

A special thanks to William, who took some time to exchange thoughts on the subject and pointed me in the direction of a solution.

IMPORTANT UPDATE: When using vmxnet3 adapters for the nested ESXi hosts, even though 8972-byte ICMP packets made it to the outside world, TCP sessions did not seem to work properly. This resulted in loss of access to the datastores and even to the nested ESXi server itself.

I was not able to get to the bottom of this, but I found that with the e1000e adapter instead, I can use jumbo frames without any connectivity issues.

As an extra bonus, the e1000e is a “supported” adapter when the guest OS type is set to ESXi 5.x, so you will not get warnings that the NIC is not supported when you vMotion the nested ESXi server.

To summarize:

- With the e1000 vmnic, there are severe issues when a 9000-byte MTU is configured

- With the vmxnet3 vmnic, TCP sessions seem to fail

- With the e1000e vmnic, everything seems to work as it did in 5.1

Thanks for sharing this. I just found out the hard way, and it’s great the solution worked; it saved me a couple of hours ;-). Cheers Duco!

Good to hear Bouke, thanks

Seriously good post. First, it clearly solved my bewildering problem. Second, nice methodical discussion of the issue and resolution. Thanks for taking the time.

Thanks Joel, glad it solved your problem.