Yesterday my good friend Gabrie van Zanten from Gabes Virtual World asked the following question on Twitter:

How can I disable VAAI in vSphere5 on a per array basis? KB1033665 is not the solution #EMC #CX4 #VMware Dont want to disable for my VNX

Gabrie (@gabvirtualworld), 18 June 2012

My first reaction was “Why would Gabe want to disable VAAI on a per array basis in the first place?”, so I asked.

His answer was simple and straightforward. He was working on an environment where ESX5 hosts had both EMC CX4s and VNXes connected. VAAI was not supported on vSphere 5 for the CX4, so he had to disable VAAI for the CX4s while leaving it on for the VNXes.

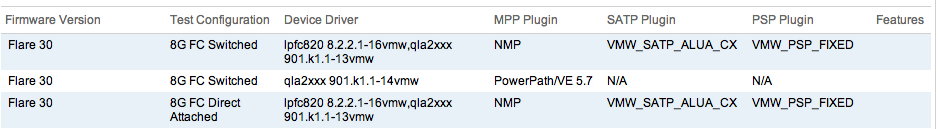

I have worked with EMC CX4 arrays in vSphere 4 environments on several occasions, and must shamefully admit I was not aware that EMC dropped support for VAAI on the CX4 for vSphere 5. I checked VMware’s HCL, and only when I compared it with the VAAI support for the VNX did I notice that the HCL output for VAAI on the CX4 was slightly different:

vSphere 5, VAAI and CX4:

vSphere 5, VAAI and VNX:

Kind of looks the same, but the output for the VNX shows a “View” link in the features column. When clicking on the View link you get:

So that is where it says the VAAI-Block features Block Zero, Full Copy, HW Assisted Locking and Thin Provisioning are supported on the VNX, and that info is missing for the CX4. In the VMware knowledge base I found KB article 2008822 which clearly states:

EMC CLARiiON CX4 series arrays are not currently supported for VAAI Block Hardware Acceleration Support on vSphere ESXi 5.0.

See this brief matrix of current VAAI support for EMC CLARiiON and VNX arrays:

| Array | vSphere 4.1 | vSphere 5.0 |
| --- | --- | --- |
| CX4 Series Flare 30/29/28+ | VAAI Supported | Not Supported |
| VNX Series OE 31 or Later * | VAAI Supported | VAAI Supported |

So indeed, VAAI is not supported on the CX4 when using vSphere 5.0.

The HCL lacks the same feature links for 5.0 Update 1, so I assume the KB article also applies to vSphere 5.0 U1.

So now I know why Gabe was looking for a way to disable VAAI on a per array basis in an ESX5 cluster: he could then still use VAAI on his VNXes, but disable it on his CX4s.

Now I hear you say: who cares, just disable VAAI completely. But this is where it gets interesting. I think Gabe could live without the Block Zero and Full Copy features of VAAI, but HW Assisted Locking is a completely different story. We all remember that the storage world for vSphere can be divided into two eras; let’s call them BV and AV (Before VAAI and After VAAI). Before VAAI, we had a thing called SCSI reservations. Different ESX hosts accessing the same shared VMFS volume used SCSI reservations to briefly lock the entire LUN while doing metadata updates on it. This was OK back then, but had the side effect that when you put too many VMs on a LUN, you got too many SCSI reservations, which had a negative impact on performance. This was the reason almost all storage vendors had best practices on LUN sizes and the maximum number of VMs per datastore.

But then a new era started, the AV era. VAAI uses ATS (Atomic Test and Set) to lock only the blocks on a LUN it wants to do the metadata update on, rather than the entire LUN. This completely changed the design guidelines for LUNs in a vSphere environment. (Of course there are a lot more things you need to keep in mind when designing your LUNs, but let’s keep it simple for now.) So when there were no other constraints, it was perfectly OK on vSphere 4 to create a 2TB-512 bytes LUN, format it with VMFS, and load it with lots of VMs, and that is exactly what a lot of folks did. For a CX4 you had to set failover mode to 4 for the ESX hosts, present the 2TB-512 bytes LUNs to your hosts, and ATS would make sure you did not run into any SCSI reservation issues.
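To make the difference between the two eras concrete, here is a toy model in Python. It is only a sketch under heavy simplification, not how VMFS or the array firmware is implemented: the `Lun` class, its method names and the idea of one lock record per VM are all made up for illustration.

```python
class Lun:
    """Toy LUN: one LUN-wide reservation plus one lock record per VM."""

    def __init__(self, vm_names):
        self.reserved_by = None                          # host holding the SCSI reservation
        self.lock_owner = {vm: None for vm in vm_names}  # hypothetical per-VM lock records

    def scsi_reserve(self, host):
        """BV era: claim the whole LUN; False means a reservation conflict."""
        if self.reserved_by not in (None, host):
            return False
        self.reserved_by = host
        return True

    def scsi_release(self, host):
        """Give the LUN-wide reservation back, if this host holds it."""
        if self.reserved_by == host:
            self.reserved_by = None

    def ats(self, vm, expected_owner, new_owner):
        """AV era: swap one lock record only if it still holds the expected value.

        On a real array the compare and the write happen as one atomic
        operation; this single-threaded model just mimics the outcome.
        """
        if self.lock_owner[vm] != expected_owner:
            return False                                 # miscompare: caller has to retry
        self.lock_owner[vm] = new_owner
        return True


lun = Lun(["vm-a", "vm-b"])

# Before VAAI: esx2 is blocked even though it wants to touch a different VM.
print("esx1 reserves LUN:", lun.scsi_reserve("esx1"))
print("esx2 reserves LUN:", lun.scsi_reserve("esx2"))
lun.scsi_release("esx1")

# After VAAI: each host locks only the record it needs.
print("esx1 locks vm-a:", lun.ats("vm-a", None, "esx1"))
print("esx2 locks vm-b:", lun.ats("vm-b", None, "esx2"))
print("esx2 locks vm-a:", lun.ats("vm-a", None, "esx2"))
```

In this model the second reservation fails while the first host holds the LUN, whereas the two ATS calls on different records both succeed. Only the last call, which targets a record that is already owned, is refused.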

And then vSphere 5 came around … We all know how this works: update vCenter, update Update Manager, import the ESX5 ISO, and update your hosts … And suddenly you end up with an unsupported environment …

OK, so to get my environment supported again, I just disable VAAI on the ESX hosts? You could, but suddenly you would be back in the BV era and could potentially run into the previously mentioned issues with SCSI reservations … And the only way to fix that would be to create new, smaller LUNs and migrate your VMs to them. (If you upgrade to VMFS5 it’s a good idea to create new LUNs anyway, to make sure you have a consistent block size on all your VMFS5 LUNs, but that’s a separate issue.)

An interesting question I have here is: how can it be that when I upgrade an ESX4 host to ESX5, ESX5 defaults to using VAAI on the CX4 even though it is not supported?

I am pretty sure zillions of folks who did the upgrade were not aware they ended up in an unsupported configuration afterwards.

So there are two easy ways to disable VAAI on a CX4:

- Disable VAAI on the ESX host; see VMware KB articles 1033665 and 2007472 for how to disable all VAAI functions on the host.

- Configure the host on the CX4 for failover mode 1. This is the classic failover mode for ESX hosts, where the LUN is presented to the ESX servers in non-ALUA mode and VAAI is not used. The drawback of this failover mode is that you fall back to the MRU path selection policy for an active/passive array.
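For the first option, the host-wide switch comes down to setting a few advanced options to 0. The small Python helper below only prints the command lines and does not run anything. The three option names and the `esxcli system settings advanced set` syntax are written down as I remember them from KB 1033665, so treat them as an assumption and check them against the KB before use. The `VAAI_SETTINGS` and `disable_commands` names are made up for this sketch.

```python
# Advanced settings that control the VAAI block primitives on an ESXi 5 host.
# Assumption: option names and esxcli syntax as remembered from KB 1033665.
VAAI_SETTINGS = {
    "/DataMover/HardwareAcceleratedMove": "Full Copy",
    "/DataMover/HardwareAcceleratedInit": "Block Zero",
    "/VMFS3/HardwareAcceleratedLocking": "HW Assisted Locking (ATS)",
}


def disable_commands(settings=VAAI_SETTINGS):
    """Return one esxcli command line per setting; nothing is executed."""
    return [
        f"esxcli system settings advanced set --int-value 0 --option {option}"
        for option in settings
    ]


# Dry run: show which primitive each command would switch off.
for primitive, command in zip(VAAI_SETTINGS.values(), disable_commands()):
    print(f"# disables {primitive}")
    print(command)
```

Note that this affects every array the host sees, which is exactly why it does not help in a mixed CX4 and VNX environment.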

On the ESX host, VAAI is attached to devices through claim rules in two classes, Filter and VAAI. For the Filter class they are:

```
# esxcli storage core claimrule list --claimrule-class=Filter
Rule Class  Rule   Class    Type    Plugin       Matches
----------  -----  -------  ------  -----------  ----------------------------
Filter      65429  runtime  vendor  VAAI_FILTER  vendor=MSFT model=Virtual HD
Filter      65429  file     vendor  VAAI_FILTER  vendor=MSFT model=Virtual HD
Filter      65430  runtime  vendor  VAAI_FILTER  vendor=EMC model=SYMMETRIX
Filter      65430  file     vendor  VAAI_FILTER  vendor=EMC model=SYMMETRIX
Filter      65431  runtime  vendor  VAAI_FILTER  vendor=DGC model=*
Filter      65431  file     vendor  VAAI_FILTER  vendor=DGC model=*
Filter      65432  runtime  vendor  VAAI_FILTER  vendor=EQLOGIC model=*
Filter      65432  file     vendor  VAAI_FILTER  vendor=EQLOGIC model=*
Filter      65433  runtime  vendor  VAAI_FILTER  vendor=NETAPP model=*
Filter      65433  file     vendor  VAAI_FILTER  vendor=NETAPP model=*
Filter      65434  runtime  vendor  VAAI_FILTER  vendor=HITACHI model=*
Filter      65434  file     vendor  VAAI_FILTER  vendor=HITACHI model=*
Filter      65435  runtime  vendor  VAAI_FILTER  vendor=LEFTHAND model=*
Filter      65435  file     vendor  VAAI_FILTER  vendor=LEFTHAND model=*
```

And for the VAAI class they are:

```
# esxcli storage core claimrule list --claimrule-class=VAAI
Rule Class  Rule   Class    Type    Plugin            Matches
----------  -----  -------  ------  ----------------  ----------------------------
VAAI        65429  runtime  vendor  VMW_VAAIP_MASK    vendor=MSFT model=Virtual HD
VAAI        65429  file     vendor  VMW_VAAIP_MASK    vendor=MSFT model=Virtual HD
VAAI        65430  runtime  vendor  VMW_VAAIP_SYMM    vendor=EMC model=SYMMETRIX
VAAI        65430  file     vendor  VMW_VAAIP_SYMM    vendor=EMC model=SYMMETRIX
VAAI        65431  runtime  vendor  VMW_VAAIP_CX      vendor=DGC model=*
VAAI        65431  file     vendor  VMW_VAAIP_CX      vendor=DGC model=*
VAAI        65432  runtime  vendor  VMW_VAAIP_EQL     vendor=EQLOGIC model=*
VAAI        65432  file     vendor  VMW_VAAIP_EQL     vendor=EQLOGIC model=*
VAAI        65433  runtime  vendor  VMW_VAAIP_NETAPP  vendor=NETAPP model=*
VAAI        65433  file     vendor  VMW_VAAIP_NETAPP  vendor=NETAPP model=*
VAAI        65434  runtime  vendor  VMW_VAAIP_HDS     vendor=HITACHI model=*
VAAI        65434  file     vendor  VMW_VAAIP_HDS     vendor=HITACHI model=*
VAAI        65435  runtime  vendor  VMW_VAAIP_LHN     vendor=LEFTHAND model=*
VAAI        65435  file     vendor  VMW_VAAIP_LHN     vendor=LEFTHAND model=*
```

So if a CX4 identified itself differently from a VNX to the host, we could be on the right track. As you can see, both classes of claim rules match on a vendor and on a model. For both a CX4 and a VNX the vendor is DGC, so there is no differentiator there. It would be nice if the two types of array reported different models we could filter on, but unfortunately EMC chose to send a more generic model string to the host: the RAID level for RAID Group LUNs and VRAID for Pool LUNs, on both the CX4 and the VNX.

The output for both a CX4 and a VNX with a LUN from a RAID Group shows:

```
# esxcli storage core device list
<SNIP>
Vendor: DGC
Model: RAID 5
<SNIP>
```

For a LUN from a pool they both show:

```
# esxcli storage core device list
<SNIP>
Vendor: DGC
Model: VRAID
```

So based on the Vendor and the Model you cannot distinguish between a CX4 and a VNX if both use RAID Group LUNs, both use Pool LUNs, or both use a mix.

But on the other hand, say your CX4s use only RAID Group LUNs and your VNXes use only Pool LUNs: in that case changing the claim rules might actually get this done.

You should then be able to change both the Filter class rule and the VAAI class rule to:

```
Filter 65431 file vendor VAAI_FILTER vendor=DGC model=V*
```

and

```
VAAI 65431 file vendor VMW_VAAIP_CX vendor=DGC model=V*
```

so they both match on the VRAID model the VNX presents and not on the RAID 5 model the CX4 presents.
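The effect of narrowing the model pattern can be checked on paper with a few lines of Python. This is a sketch that assumes the claim rule wildcard behaves like a plain shell-style glob, which I have not verified on a host. `rule_matches` and the device dictionaries are hypothetical names for illustration, and the model strings a real host reports may carry padding that this ignores.

```python
from fnmatch import fnmatchcase


def rule_matches(rule, device):
    """True when both vendor and model of the device fit the rule's patterns."""
    return (fnmatchcase(device["vendor"], rule["vendor"])
            and fnmatchcase(device["model"], rule["model"]))


default_rule = {"vendor": "DGC", "model": "*"}     # rule 65431 as shipped
narrowed_rule = {"vendor": "DGC", "model": "V*"}   # proposed replacement

devices = {
    "CX4 RAID Group LUN": {"vendor": "DGC", "model": "RAID 5"},
    "CX4 Pool LUN": {"vendor": "DGC", "model": "VRAID"},
    "VNX RAID Group LUN": {"vendor": "DGC", "model": "RAID 5"},
    "VNX Pool LUN": {"vendor": "DGC", "model": "VRAID"},
}

for name, device in devices.items():
    print(f"{name:20} default={rule_matches(default_rule, device)!s:5} "
          f"narrowed={rule_matches(narrowed_rule, device)}")
```

The narrowed rule separates RAID Group LUNs from Pool LUNs, not CX4s from VNXes. A Pool LUN on a CX4 would still get VAAI, and a RAID Group LUN on a VNX would lose it, so the trick only works when each array type sticks to one kind of LUN.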

Please keep in mind this is a proof of concept, and I have not been able to test this.
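If you wanted to try it in a lab, the rule change would probably look something like the command sequence printed by the Python sketch below. It is a dry run that only builds strings. The `claimrule remove`, `add`, `load` and `run` subcommands and their options are existing esxcli syntax as far as I know, but I do not know whether the built-in rule 65431 can be removed and re-added like this, or whether already claimed devices need a reboot or unclaim first. `rule_change_commands` is a hypothetical helper name.

```python
RULE_ID = 65431  # the DGC rule in both claim rule classes
PLUGINS = {"Filter": "VAAI_FILTER", "VAAI": "VMW_VAAIP_CX"}


def rule_change_commands(model_pattern="V*"):
    """Build (but do not run) the esxcli calls that would narrow rule 65431."""
    base = "esxcli storage core claimrule"
    commands = []
    for rule_class, plugin in PLUGINS.items():
        # Untested assumption: the default rule can be removed and re-added.
        commands.append(f"{base} remove --rule {RULE_ID} --claimrule-class={rule_class}")
        commands.append(
            f'{base} add --rule {RULE_ID} --type vendor --vendor DGC '
            f'--model "{model_pattern}" --plugin {plugin} --claimrule-class={rule_class}'
        )
        commands.append(f"{base} load --claimrule-class={rule_class}")
    # Only the Filter class is run after loading, as in VMware's own examples.
    commands.append(f"{base} run --claimrule-class=Filter")
    return commands


for command in rule_change_commands():
    print(command)
```

Printing instead of executing is deliberate: you can review every line before pasting anything into a host you care about.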

So for now, I think setting failover mode 1 on the CX4 is the only supported way to accomplish this, with the negative side effect that MRU will be used, so Round Robin path selection, for instance, is not an option. And for now, if the CX4 does not get supported on vSphere 5 with VAAI, you might need to go back to using smaller LUNs, since you cannot use ATS and have to fall back to SCSI reservations.

I reached out to some contacts within the EMC organisation to figure out what their suggestion would be to address these issues, and will update my blog when I get any answers.

One suggestion I have for EMC that would give us the ability to distinguish between a CX4 and a VNX on the ESX host would be to change the model string a VNX sends to the ESX hosts from info on the type of RAID set it uses to a string based on the actual type of the array.

Optimistic Locking?

I have hardly seen people hitting issues around SCSI reservations in the last 2 years, to be honest. Those who did had snapshots enabled on 30 VMs on a single VMFS volume 🙂

http://blogs.vmware.com/vsphere/2012/05/vmfs-locking-uncovered.html

Good point, but that might change when people start using 64TB VMFS volumes (not that that is a good idea, just an example of what people will do, simply because they can …). I just tried to point out that there could be consequences when upgrading to ESX5 with the current lack of support for VAAI on the CX4.

Hi Duncan,

this really depends on what type of storage arrays and storage virtualization layer products you use. In the past – when using HDS based arrays – and even with optimistic locking we saw VMKernel.log messages stating that VMFS file locks were held longer than expected (no real SCSI reservation *conflicts*, but timing issues that certainly influenced storage performance), although we limited the number of hosts per cluster to only 6! With VAAI this was gone, and I expect that the ATS improvements in vSphere 5.0 will mitigate this even further.

So, VAAI does make a real difference, and I don’t want to do without it anymore.

– Andreas

Hi Duco,

I blogged about this issue a while ago already. In the end it turned out that EMC would support VAAI for the CX4 even when running vSphere 5.0 if you file an individual RPQ with them. We have it running in production now without any problems. Read the whole story here:

http://v-front.blogspot.de/2012/05/whats-deal-with-emc-cx-arrays-not.html

– Andreas

Thanks Andreas, great post, I had not seen it yet. In the end it indeed seems to be a matter of just pushing the CX4 through the VMware qualification process: since EMC does support it under an RPQ, there seems to be no technical reason not to support it. But in the current situation it is not supported by VMware because EMC did not put it through the qualification process, while EMC itself does support it, and that causes a lot of confusion in my opinion. Either it is supported by both VMware and EMC, or it is not.

VMware’s statement according to this is: “If you have an RPQ with EMC we will also support this configuration.”

Damn! … they should just properly modify and re-run the qualification tests, make it pass, and then officially support this …

+1

Good in-depth article. We also have a mixed environment here with a CX4 and a VNX, and I wasn’t aware that the CX4 isn’t VAAI supported anymore with vSphere 5.

Do you also know why the CX4 isn’t supported anymore? Is the VAAI implementation on the CX4 too old for vSphere 5?

oh, did a refresh and I see the answer…

I think that is the million dollar question only EMC can answer …

Hi Duco,

Good reading, thanks for sharing.

I have just finished upgrading an environment to vSphere 5.x with EMC CX4-120 and EqualLogic arrays. Now I know what to do.

Best Regards,

Paul